Image-based maps for robot localization

Dana Cobzas, Hong Zhang and Martin Jagersand

References

Cobzas, D., Jagersand, M. and Zhang, H. A Panoramic Model for Remote Robot Environment Mapping and Predictive Display, International Journal of Robotics and Automation, 20(1):25-34, 2005

Cobzas, D., Jagersand, M. and Zhang, H. A Panoramic Model for Robot Predictive Display, Vision Interface 2003, pp. 111-118, Best student paper award

Cobzas, D., Zhang, H. and Jagersand, M. Image-Based Localization with Depth-Enhanced Image Map, IEEE Conference on Robotics and Automation (ICRA) 2003, pp. 1570-1575

Cobzas, D., Zhang, H. and Jagersand, M. A Comparative Analysis of Geometric and Image-Based 3D-2D Registration Algorithms, IEEE Conference on Robotics and Automation (ICRA) 2002, pp. 2506-2511

Cobzas, D. and Zhang, H. Cylindrical Panoramic Image-Based Model for Robot Localization, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2001, pp. 1924-1930

Cobzas, D. and Zhang, H. Mobile Robot Localization using Planar Patches and a Stereo Panoramic Model, Vision Interface 2001, pp. 94-99

Cobzas, D. and Zhang, H. Planar Patch Extraction with Noisy Depth Data, Proc. of Third International Conference on 3-D Digital Imaging and Modeling 2001, pp. 240-245

Cobzas, D. and Zhang, H. 2D Robot Localization with Image-Based Panoramic Models Using Vertical Line Features, Vision Interface 2000, pp. 211-216

Cobzas, D. and Zhang, H. Using Image-Based Panoramic Models for 2D Robot Localization, Proc. of Western Computing Graphics Symposium 2000, pp. 1-7

Demos

An example of rendering using the second model (panorama registered with depth from laser rangefinder)

Description

Traditionally, robot navigation has been cast as the problem of building a representation of the 2D or 3D navigation space in Cartesian world coordinates. This representation is then used to control the motion of the robot. We propose an alternative to traditional geometric techniques: a panoramic image representation of the navigation room is matched with the current image provided by a mobile robot to compute its location. This model contains detailed information about the navigation space without explicit 3D reconstruction.

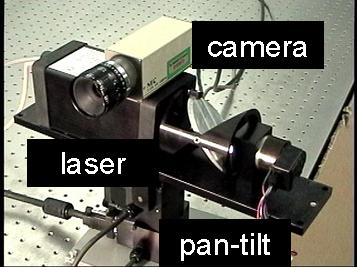

The model is based on a panoramic image mosaic augmented with range information. Because a mosaic contains no parallax, it can be re-rendered only from the viewpoint at which it was captured. To overcome this limitation we added depth information and evaluated two types of depth sensing: a stereo vision system and a laser range finder. In the latter case, the image and depth information come from different sensors (camera and laser), so we had to solve the data registration problem. The registered model is segmented into planar pieces that can be reprojected to any position in order to identify the robot location.
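The role of depth in re-rendering can be illustrated with a minimal sketch. It is not the implementation used in this work: the cylindrical mapping (columns linear in azimuth, `depth` measured horizontally from the cylinder axis), the pinhole target camera, and all function and parameter names are assumptions made for illustration. A panorama pixel with depth is back-projected to a 3D point, which is then projected into a camera placed at a different position.

```python
import math

def panorama_pixel_to_point(col, row, depth, width, focal, row_center):
    """Back-project a cylindrical panorama pixel with known depth to a 3D point.

    Assumed (hypothetical) convention: the column maps linearly to azimuth
    over 360 degrees, azimuth 0 looks along +z, and `depth` is the horizontal
    distance from the cylinder axis to the scene point.
    """
    theta = 2.0 * math.pi * col / width
    x = depth * math.sin(theta)
    z = depth * math.cos(theta)
    y = depth * (row - row_center) / focal
    return (x, y, z)

def project_to_view(point, cam_pos, cam_yaw, focal, cx, cy):
    """Project a 3D point into a pinhole camera located at cam_pos and
    rotated by cam_yaw (radians) about the vertical axis.

    Returns pixel coordinates (u, v), or None if the point is behind the camera.
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    # Express the offset in the camera frame (x right, z forward).
    xc = c * dx - s * dz
    zc = s * dx + c * dz
    if zc <= 0.0:
        return None
    return (cx + focal * xc / zc, cy + focal * dy / zc)
```

Without the depth value the 3D point is unknown, so the pixel's position in the shifted view cannot be computed; this is the parallax that a plain mosaic lacks.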

To demonstrate the applicability of the proposed models for robot navigation, we developed localization algorithms that take an image from the current robot position and match it with the model to compute the actual robot location. The panoramic model with depth data provided by the trinocular device was used to calculate the absolute location of the robot by matching planar patches and vertical lines. A similar approach was adopted for an incremental localization algorithm that uses 3D lines extracted from the registered panoramic model with laser range data. More details are on the PhD thesis webpage.
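As a rough illustration of computing a location from matched features, the sketch below estimates a 2D robot pose from matched vertical lines. It is a simplification and not the algorithm from the papers: it assumes each vertical line has already been reduced to a point on the floor plane, that its position is known both in the model frame and in the robot frame (for example from stereo depth), and that the correspondences are given. The function name and the closed-form least-squares rigid alignment are choices made for this example.

```python
import math

def estimate_pose_2d(model_pts, observed_pts):
    """Least-squares 2D rigid alignment of matched floor-plane points.

    model_pts:    (x, y) positions of vertical lines in the model frame.
    observed_pts: the same lines, in the same order, in the robot frame.
    Returns (x, y, theta): the robot position and heading in the model frame,
    such that model ~= R(theta) * observed + (x, y).
    """
    n = len(model_pts)
    if n < 2 or n != len(observed_pts):
        raise ValueError("need at least two matched points")
    # Centroids of both point sets.
    mx = sum(p[0] for p in model_pts) / n
    my = sum(p[1] for p in model_pts) / n
    ox = sum(p[0] for p in observed_pts) / n
    oy = sum(p[1] for p in observed_pts) / n
    # Accumulate the dot and cross terms of the centered correspondences.
    cos_sum = 0.0
    sin_sum = 0.0
    for (wx, wy), (rx, ry) in zip(model_pts, observed_pts):
        ax, ay = rx - ox, ry - oy
        bx, by = wx - mx, wy - my
        cos_sum += ax * bx + ay * by
        sin_sum += ax * by - ay * bx
    theta = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated observed centroid onto the model centroid.
    tx = mx - (c * ox - s * oy)
    ty = my - (s * ox + c * oy)
    return tx, ty, theta
```

With noisy measurements the result is the pose that minimizes the summed squared distances between matched points; a practical system would also need to establish the matches and reject outliers, which this sketch leaves out.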
